MS-01

I was interested in setting up a small Proxmox HA cluster using Ceph to tick the shared data store requirement. I didn’t want used enterprise gear but needed fast networking. The Minisforum MS-01 wasn’t out when I started looking, but it was worth the wait. Anything else would have cost much more, and so far they have worked very well for my needs.

Part List

Itemized

Prices will change with sales and as the parts get older.

| Item | Price | Quantity | Total | Note |
| --- | --- | --- | --- | --- |
| MS-01 13900H Barebone | $679 | 3 | $2037 | Cannot get on Amazon |
| Crucial RAM 96GB | $300 | 3 | $900 | Not officially supported but works great! |
| Crucial T500 1TB NVMe | $100 | 3 | $300 | For Proxmox OS |
| Samsung PM983a | $159 | 6 | $954 | For Ceph |
| USB4 Cables | $16 | 3 | $48 | Only needed for TB networking |
| SFP+ DAC | $20 | 3 | $60 | 3m was too long |

So all in all the project was looking to cost about $4,300, which was a significant investment for a high-risk new product with a laptop CPU. However, it feels less expensive in the moment since the cost is spread across vendors!
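As a quick sanity check on that figure, here is a minimal Python sketch that recomputes the line totals and the grand total from the table. The prices and quantities are copied from the table; the names `PARTS`, `line_totals`, and `grand_total` are just made up for this example.

```python
# Parts list as (item, unit price in USD, quantity), copied from the table.
PARTS = [
    ("MS-01 13900H Barebone", 679, 3),
    ("Crucial RAM 96GB", 300, 3),
    ("Crucial T500 1TB NVMe", 100, 3),
    ("Samsung PM983a", 159, 6),
    ("USB4 Cables", 16, 3),
    ("SFP+ DAC", 20, 3),
]


def line_totals(parts):
    """Return a dict mapping each item to price * quantity."""
    return {item: price * qty for item, price, qty in parts}


def grand_total(parts):
    """Sum every line total into the overall project cost."""
    return sum(line_totals(parts).values())


if __name__ == "__main__":
    for item, total in line_totals(PARTS).items():
        print(f"{item:<24} ${total:>5}")
    print(f"{'Total':<24} ${grand_total(PARTS):>5}")
```

The exact sum comes out to $4,299, or $1,433 per node, before tax and shipping.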

Description

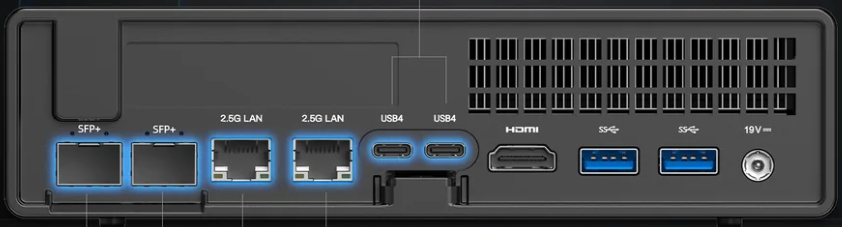

Minisforum MS-01

The Minisforum MS-01 was arguably the most important part as it enabled the rest of the spending. I went with the 13900H Barebone model because it was the only one available at the time, but I don’t regret it.

The MS-01 was a bit larger than most of the NUCs you’d see but could fit 3 NVMes and a PCIe card (one slot wide) in an x16 slot that runs at x8 speed.

It had two SFP+ ports, two USB4 ports, and two 2.5GbE ports, which add up to 65Gbps of networking capabilities (kinda)!
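The 65Gbps figure is just the nominal link rates added together. Here is a small sketch of that arithmetic, assuming 10Gb per SFP+ port, 20Gb per USB4 port when used for Thunderbolt networking, and 2.5Gb per Ethernet port. These are line rates, not measured throughput, which is where the "kinda" comes in. `PORTS` and `aggregate_gbps` are illustrative names.

```python
# (port type, port count, nominal Gbps per port)
PORTS = [
    ("SFP+", 2, 10.0),
    ("USB4 (Thunderbolt networking)", 2, 20.0),
    ("2.5GbE", 2, 2.5),
]


def aggregate_gbps(ports):
    """Add up count * nominal speed across every port type."""
    return sum(count * speed for _, count, speed in ports)


if __name__ == "__main__":
    for name, count, speed in PORTS:
        print(f"{count} x {name}: {count * speed:g} Gbps")
    print(f"Total: {aggregate_gbps(PORTS):g} Gbps")
```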

The initial goal was to use the 20Gb Thunderbolt network for Ceph’s private network and SFP+ for its public network, plus have other adapters for other things the VMs would require. Ultimately I switched the Ceph private network over to SFP+ because I wanted more than three nodes and 10Gb was fast enough for my needs.
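A likely reason the Thunderbolt network tops out at three nodes: assuming the nodes are cabled directly to each other with no switch in between, every node needs one port per peer, and the MS-01 only has two USB4 ports. The sketch below works through that under this assumption; the node names and helper functions are hypothetical.

```python
from itertools import combinations


def full_mesh_links(nodes):
    """List every direct node-to-node cable a full mesh requires."""
    return list(combinations(nodes, 2))


def ports_needed_per_node(node_count):
    """In a full mesh each node connects to every other node."""
    return max(node_count - 1, 0)


def max_full_mesh_nodes(ports_per_node):
    """Largest cluster that can be fully meshed with this many ports per node."""
    return ports_per_node + 1


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3"]  # hypothetical node names
    print(full_mesh_links(nodes))        # three cables for three nodes
    print(max_full_mesh_nodes(2))        # two USB4 ports per MS-01
    print(ports_needed_per_node(4))      # a fourth node would need a third port
```

Three nodes need three cables, which matches the three USB4 cables in the parts list. A fourth node would need a third port on every machine, while a switched SFP+ network has no such limit.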

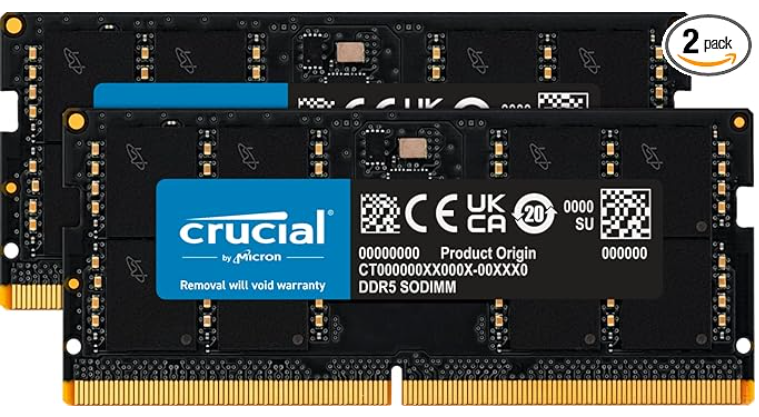

Crucial DDR5 96GB Laptop RAM

Officially the MS-01 supports 64GB of RAM but strangers on the internet said 96GB would be fine and so far they’ve been dead on. With 20 threads from the i9-13900H I figured more RAM wouldn’t hurt.

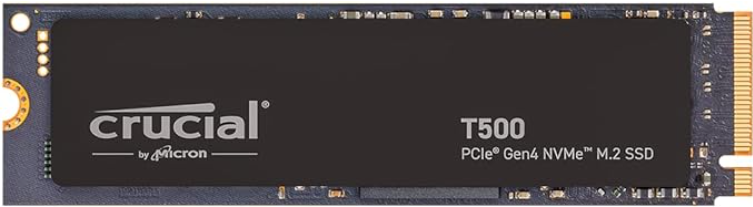

Crucial T500 1TB Gen4 NVMe

The name of the game for these M.2s was something cheap that I could install Proxmox on and use for a select few VMs that wouldn’t need to be highly available, like k8s nodes, which will already rebalance pods if any go down.

Samsung PM983a NVMe PCIe M.2 22110 1.88TB

This was a tricky one but things have been looking good. I wanted an enterprise M.2 with PLP for Ceph since it’s what Ceph recommends. I’m already going to so much trouble to make a highly available cluster that it’s not worth saving some money only to end up with a corrupt OSD after a power failure.

eBay was the only option for these but everything has been fine so far and I’ve since purchased four more.

Cables

USB4 cables on Amazon are always a risky click unless you go with a brand you can trust. I tend to stick with Anker, Cable Matters, or Monoprice. Ugreen seems to be gaining popularity now and I’ve grabbed a few of theirs too.

Nowadays I get most of my DAC and Ethernet cables from UniFi. I did purchase a 10Gtek DAC when their 3m ones were sold out, which was fine, but I’m usually on there blowing the budget anyway, so I throw in a few cables.

Assembly

Unboxing

I captured enough pictures to “unbox” this live so bear with me. I put together a nice loot pile before starting the job:

The device came nicely packaged in a little cube:

Opening it up revealed a nicely wrapped mini workstation:

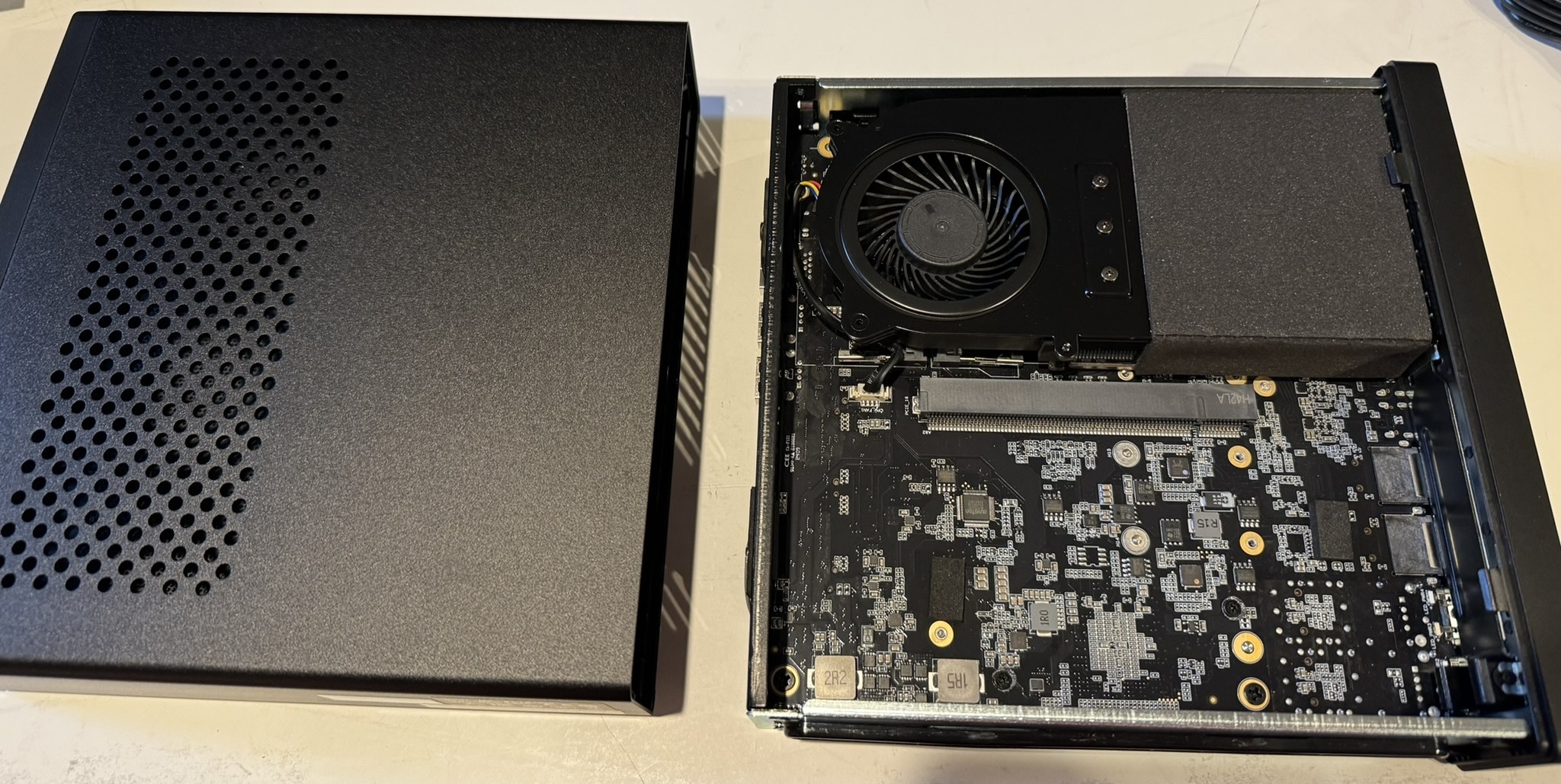

Next came cracking it open. The lid slid off easily:

Note that all your ports stay safe; there is no panel here that comes off:

RAM

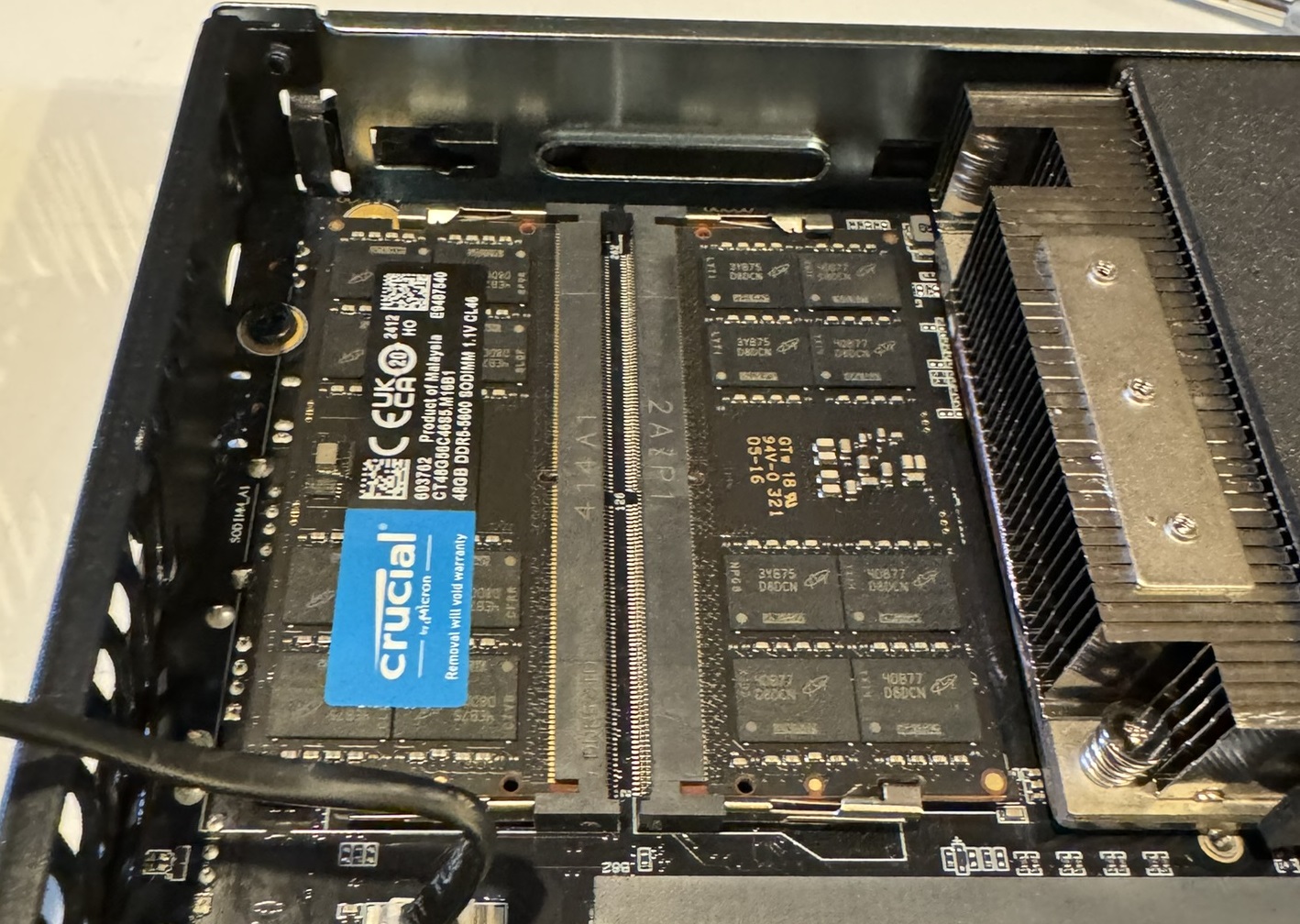

First up was the 96GB Crucial RAM:

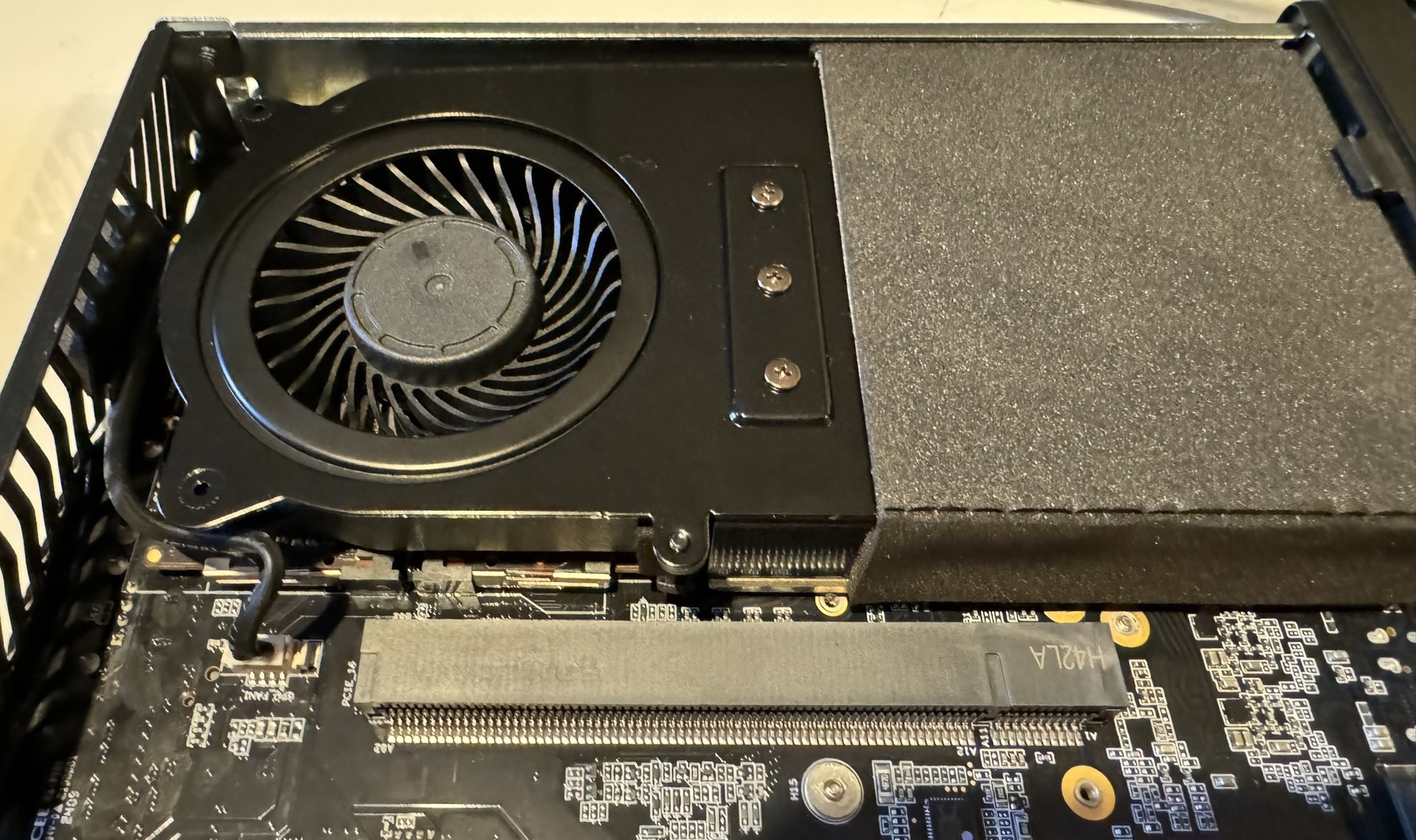

This required popping off the CPU cooler fan which had the RAM underneath:

It was a bit awkward to pop off and took a little jiggling, but once it was off the sockets were easily accessible:

And at the right angle (and flipped correctly) the RAM modules slid in no problem:

Then some more jiggling and screwing in the tiny screws got things back together:

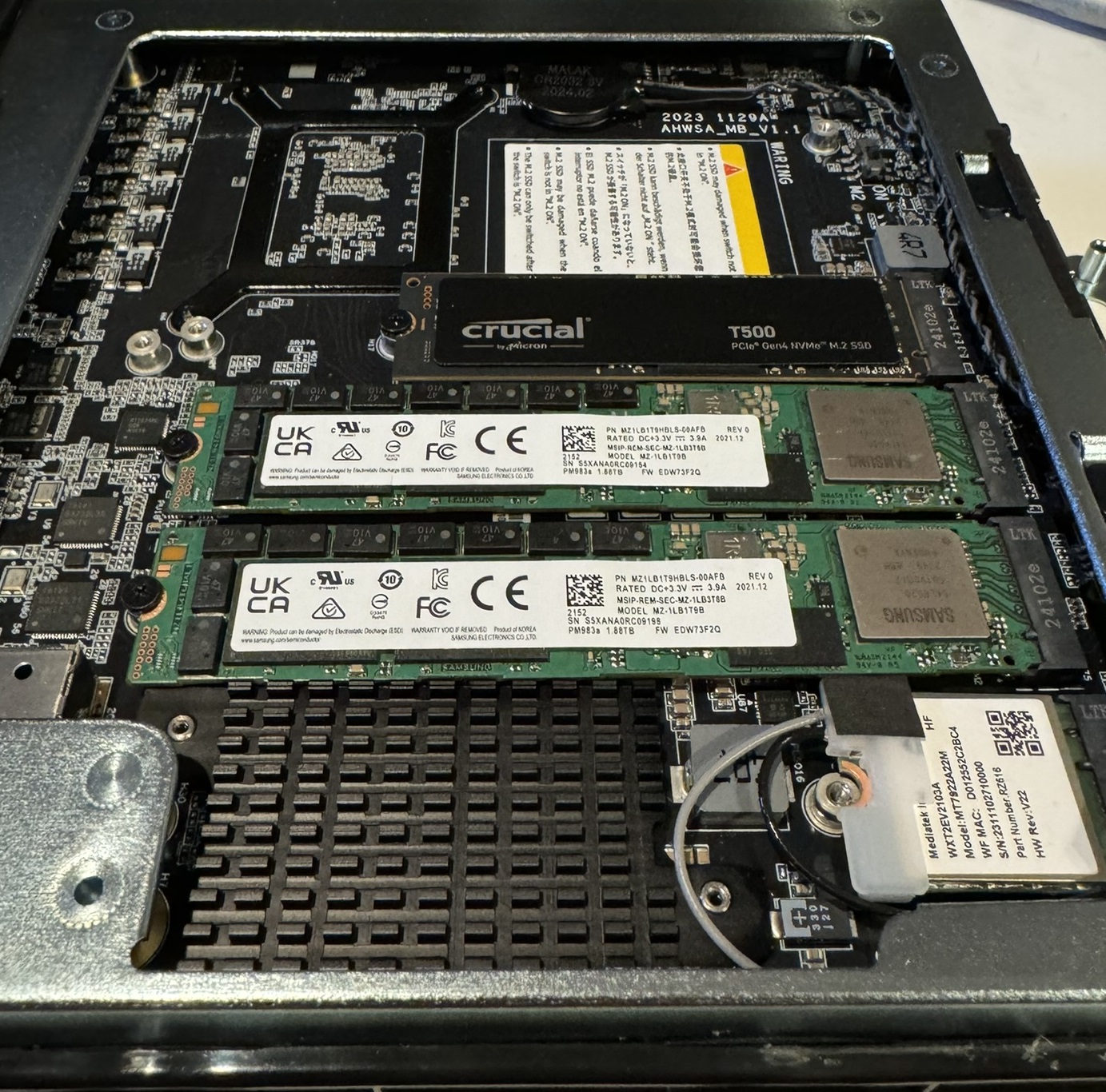

NVMes

Next required flipping the device over to get at the three M.2 slots. Note that these screws worked better with a screwdriver one size down from the one used on the CPU cooler.

Before sticking in the M.2s I removed the standoffs that were for shorter M.2s. With a little pressure I could get the screw and standoff out together. However, I didn’t realize that initially and used some needle nose pliers for the standoffs after removing the screw.

First to go in was the Crucial T500:

Nothing crazy there:

Next were the Samsung PM983a’s for Ceph:

Those went in easily as well:

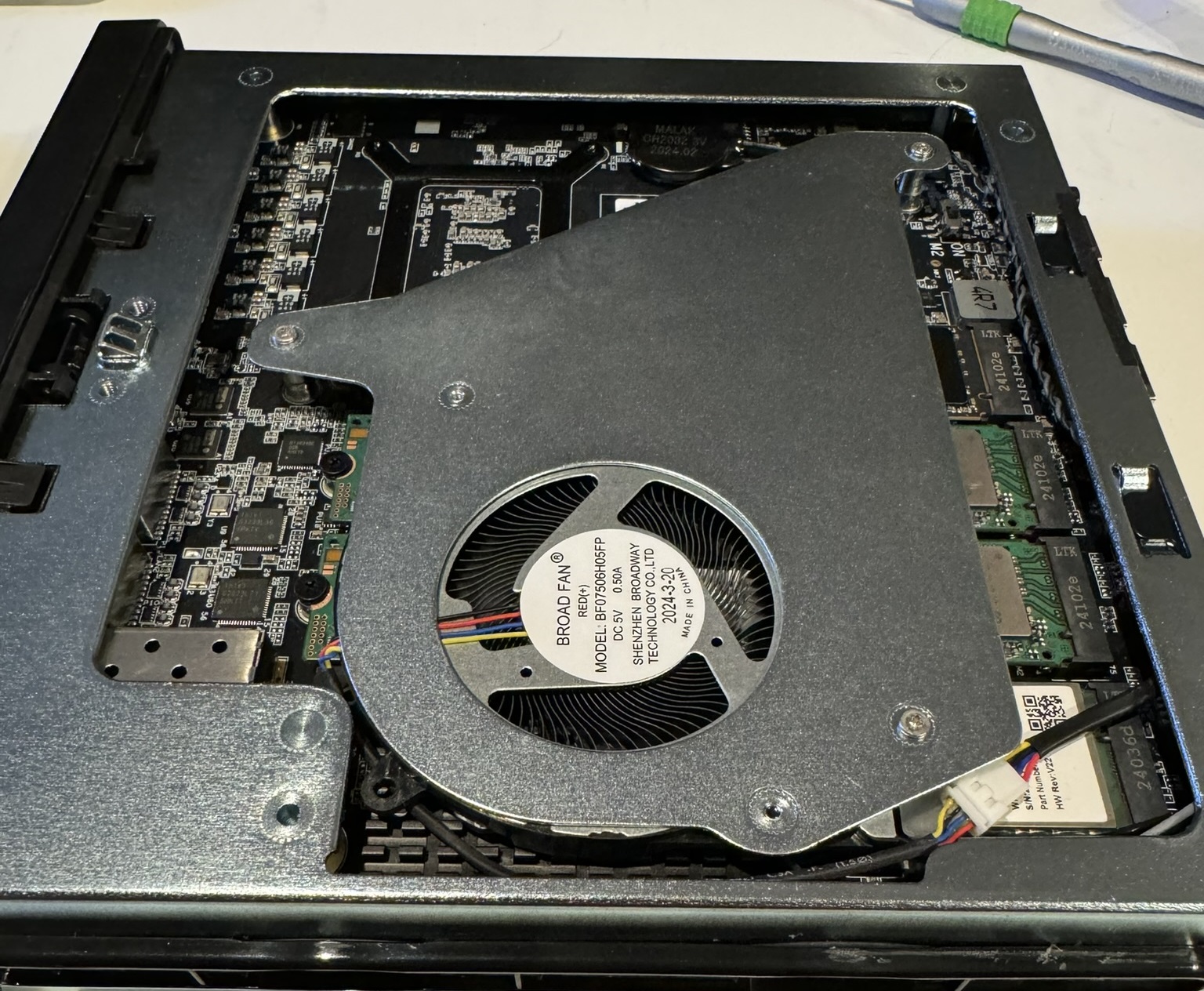

Finally, securing the rear cooler:

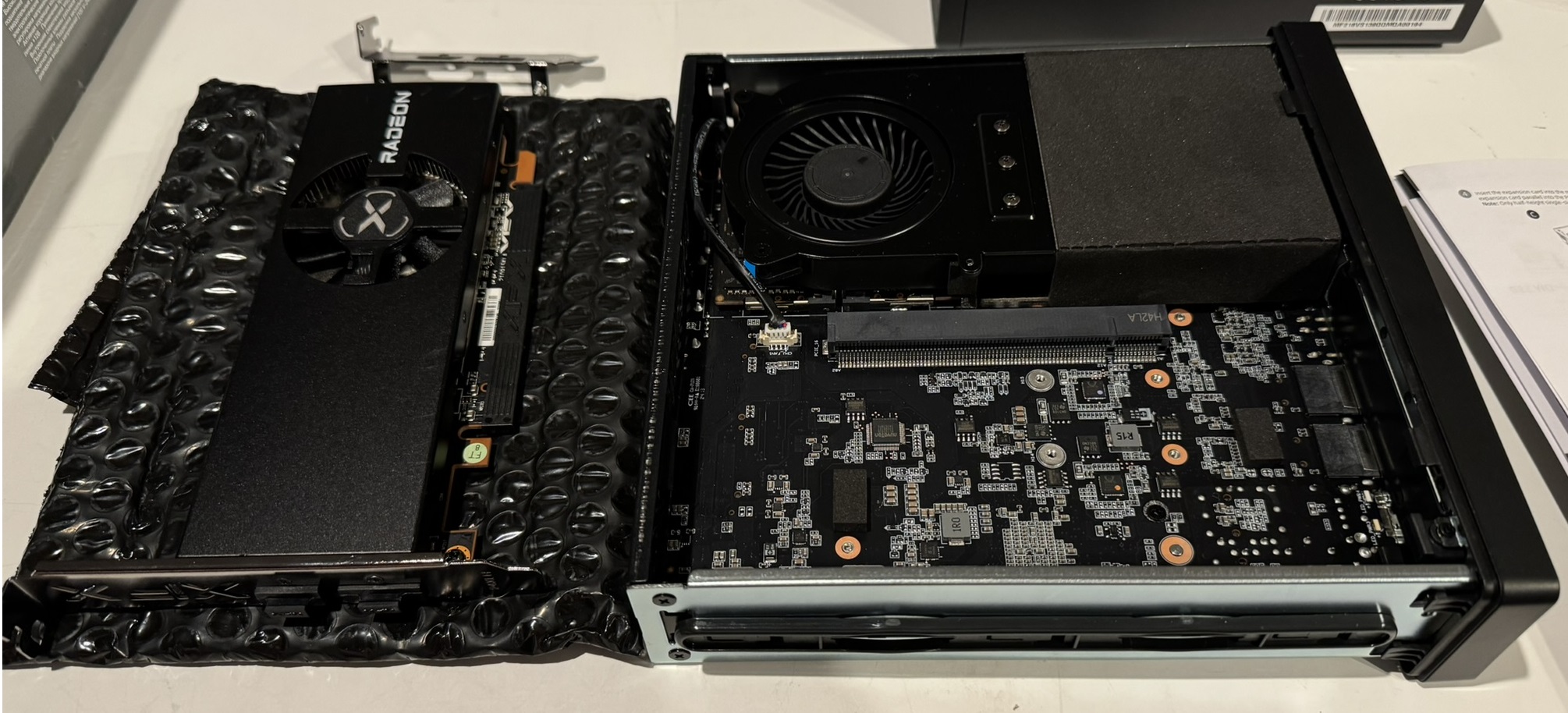

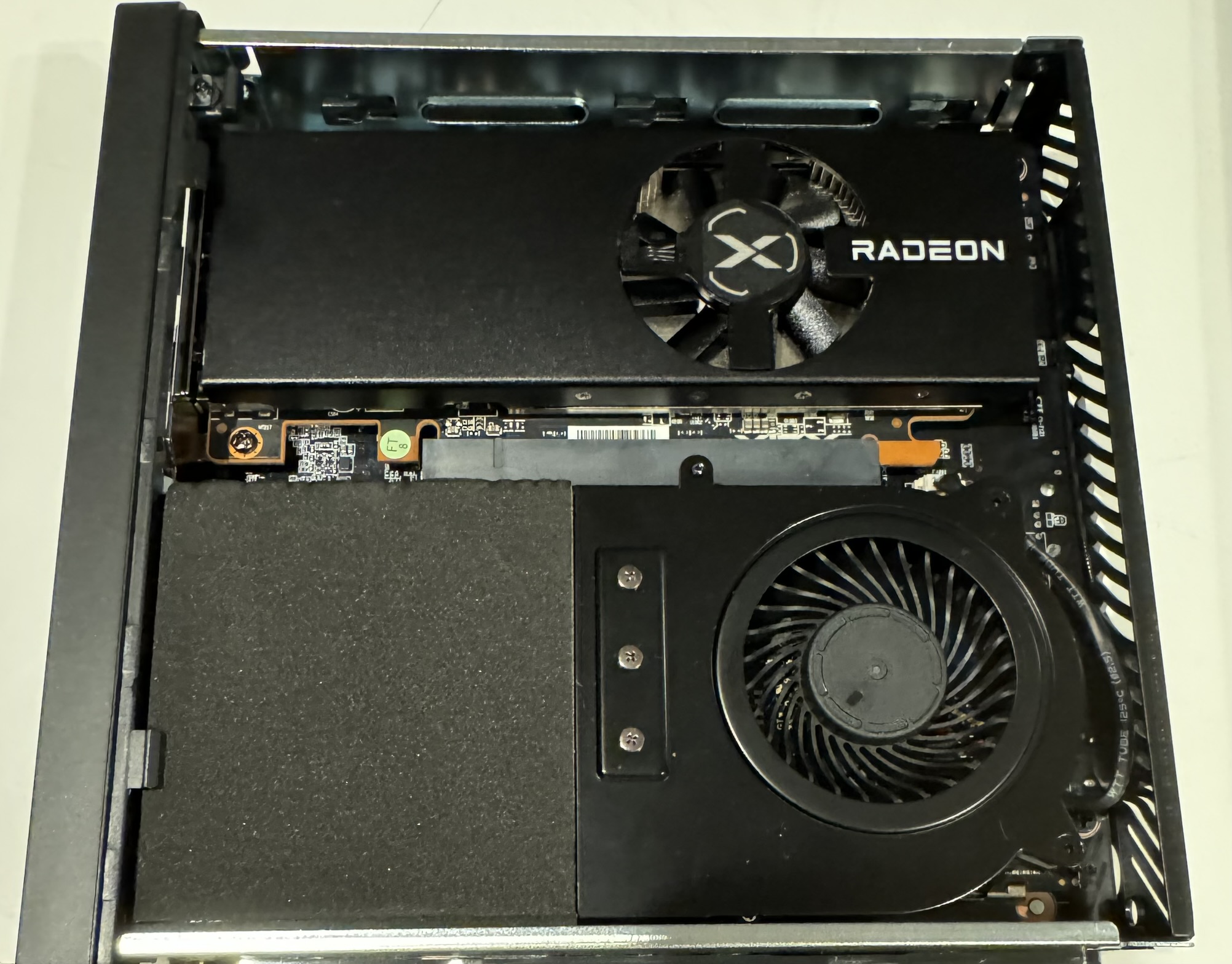

GPU (Optional)

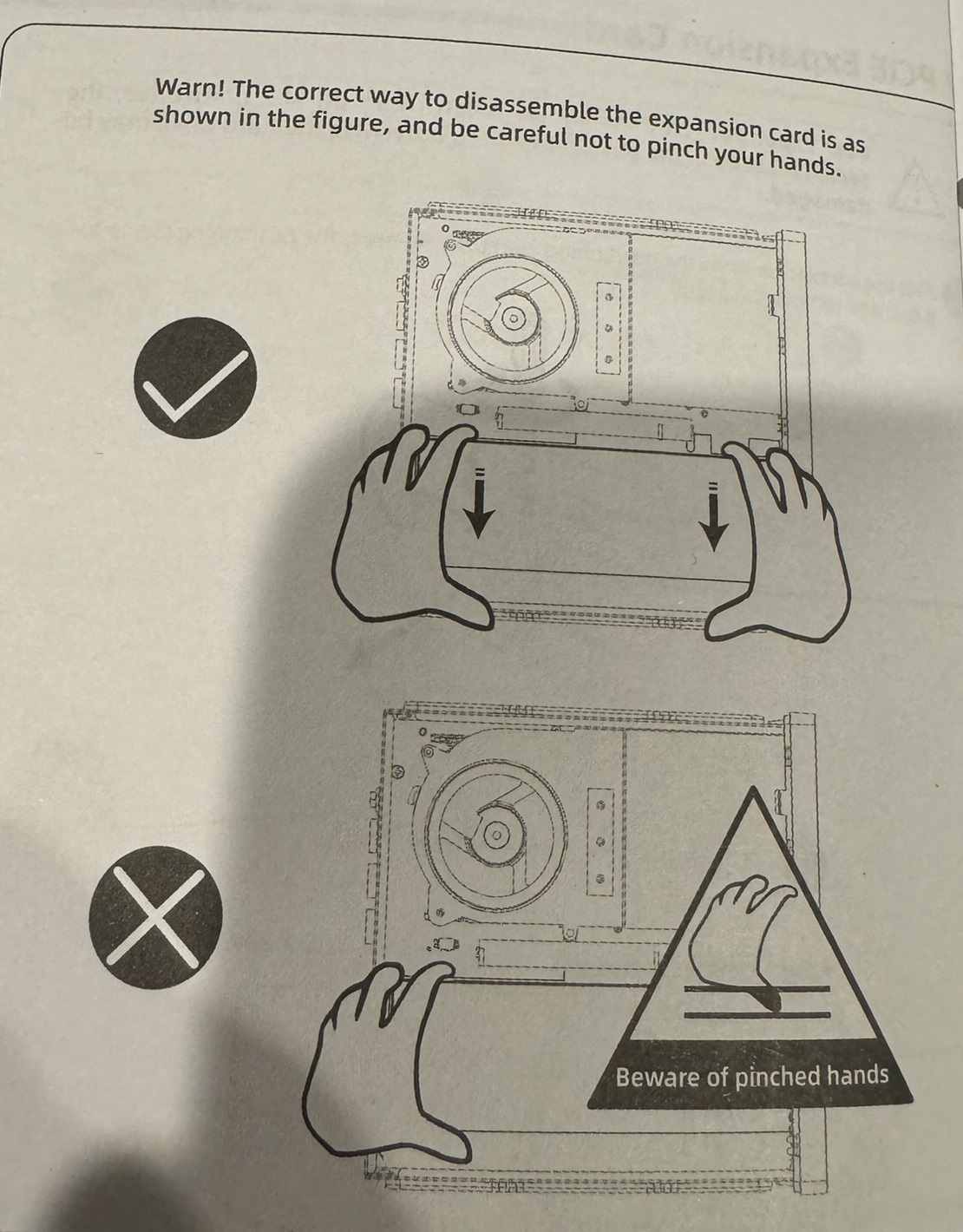

I decided to install a Radeon RX 6400 in one of the nodes to get a feel for how it’d perform, fit, and how hot it’d run. Before getting started I noticed I was in grave danger:

The GPU I selected was an XFX RX 6400, which was the only thing I could find that wasn’t VGA port ancient that would fit in the MS-01:

Still a monster compared to the little workstation it was going to live inside of:

But before it could go in I had to get the half-height faceplate on. This turned out to require a lot more fiddling than I was used to for network cards and HBAs. The entire cooler and fan had to be unscrewed before you could get at the screws for the faceplate:

But things were looking good once it was all back together:

And finally the RX 6400 was snug in the MS-01 and nobody lost a finger!

First Boot

Now we were all set to boot up and install Proxmox. First boot takes a while so don’t get impatient for it to POST:

I went through the motions of installing Proxmox. Make sure it detects your country and IP/domain automatically or you’re probably going to have to do an offline install and set up networking later. This happened to me once but rebooting squared it away.

Cluster

One MS-01 gets pretty lonely so it’s recommended you run them in a cluster:

In this install I was testing a ring network:

Wires got a bit out of control:

Finally, they found their home on a shelf in the rack:

Next Steps

For what to do now that you have some MS-01s, head over to adding a node.